The Challenge

In today’s software testing landscape, organizations face a critical disconnect: while AI models have made tremendous strides in capabilities, they remain isolated from the vital data and systems that power testing processes. This creates several fundamental challenges:

| Challenge | Description |

|---|---|

| Information Silos | Testing data is scattered across various systems - requirements in Jira, code in Git, test cases in test management tools, and execution results in CI/CD platforms. AI assistants struggle to access this fragmented information effectively. |

| Integration Overhead | Every new testing tool or data source requires its own custom integration with AI systems, creating an unsustainable web of connectors that’s difficult to maintain and scale. |

| Context Switching | As testers move between different tools and phases of testing, AI assistants lose context, requiring repeated explanation of project details and testing requirements. |

| Data Security | Organizations need to maintain control over sensitive testing data while still making it accessible to AI assistants for analysis and automation. |

| Skills Gap | Teams often lack expertise in both testing and AI, making it challenging to implement and maintain AI-powered testing solutions. |

| Real-time Adaptation | AI systems struggle to adapt to rapid changes in application architecture, test requirements, or business rules without manual intervention. |

The Solution

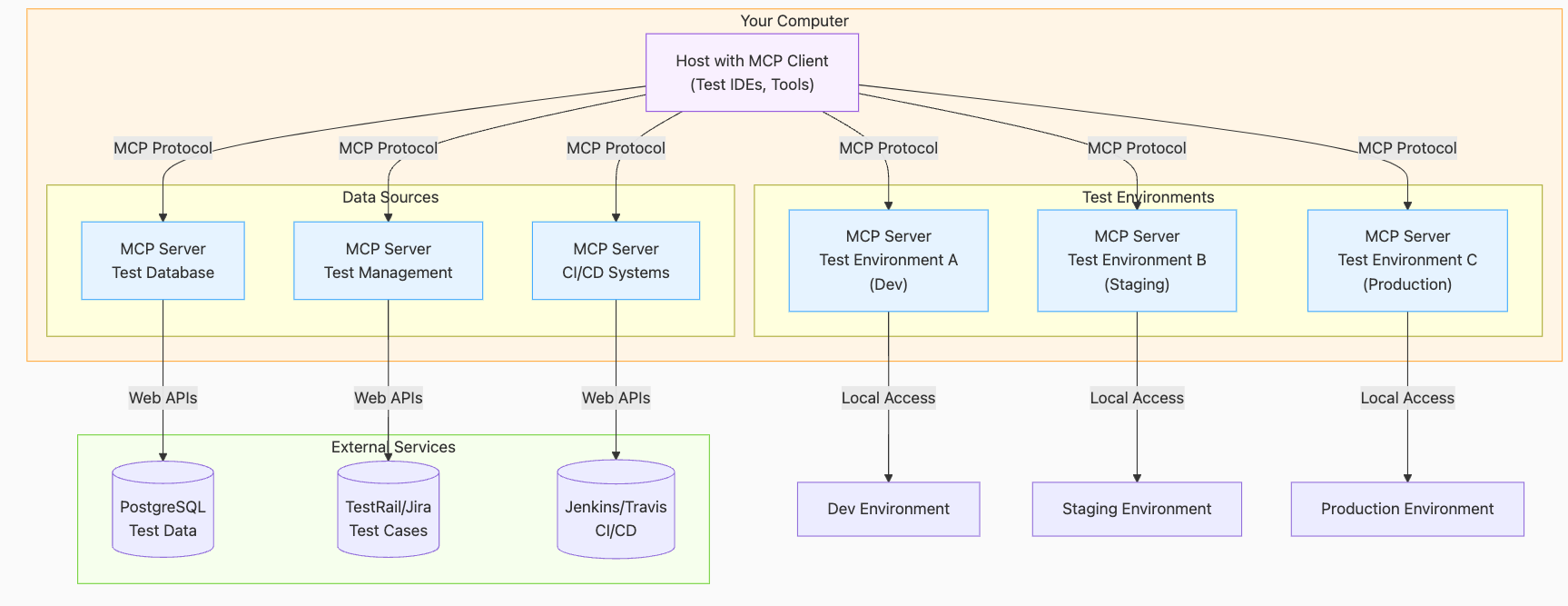

Model Context Protocol (MCP) introduces a universal standard for connecting AI systems with testing data sources and tools.

Instead of building custom integrations for each tool, MCP provides a standardized way to connect AI assistants with testing environments through a simple yet powerful protocol.

MCP in Testing

Here’s a practical approach to leveraging MCP in the testing lifecycle to enhance AI assistant effectiveness:

- Hosts running MCP Clients can be IDEs or AI Tools that need to access data from MCP Servers

- MCP Servers are lightweight programs that expose data from specific sources (e.g. Git, Jira, etc.) or external data sources

MCP Clients can be implemented in any language supporting TCP/IP connections, making it highly flexible

Example of MCP Server:

from mcp.server.fastmcp import FastMCP

from mcp.types import Resource

import httpx

mcp = FastMCP("TestAutomation")

@mcp.tool()

async def fetch_test_results(test_id: str) -> Resource:

"""Fetch test execution results for a specific test"""

async with httpx.AsyncClient() as client:

# Example API call to test results endpoint

response = await client.get(f"https://test-api.example.com/results/{test_id}")

test_data = response.json()

# Create a prompt as a single string

story = f"""You are a test results analyzer with expertise in quality assessment.

Your task is to analyze the provided test execution data and transform it into an insightful summary (2-3 paragraphs) that captures:

- Overall test status and metrics

- Key findings and potential issues

- Recommendations for improvement

API Response: {test_data}"""

return story

@mcp.tool()

async def review_test_plan(project_id: str) -> Resource:

"""Review and provide feedback on a test plan"""

async with httpx.AsyncClient() as client:

# Example API call to test plan endpoint

response = await client.get(f"https://testplan-api.example.com/plans/{project_id}")

plan_data = response.json()

# Create a prompt for test plan review

review = f"""You are a senior test strategist with extensive experience in test planning.

Your task is to review the provided test plan and offer expert feedback (2-3 paragraphs) covering:

- Completeness and effectiveness of the test strategy

- Risk assessment and mitigation approaches

- Resource allocation and timeline considerations

Test Plan Details: {plan_data}"""

return review

- the

fetch_test_resultsandreview_test_planare just examples, we can have as many tools as we want

Your IDE with MCP Client will be able to use these tools to help you with your testing activities. One prompt example could be:

I need to test the following feature:

- Feature: User can create a new account

- Scenario: User creates a new account with valid credentials

- Expected Result: Account is created successfully

The MCP Client will be able to use the review_test_plan tool to review the test plan and provide feedback.

The entire interaction can be done directly in the IDE, without the need to switch between different tools.

The MCP Client will be able to use the fetch_test_results tool to fetch the test results and provide feedback.

Key Components

Standardized Connectivity

- MCP Servers: Testing tools can expose their data through MCP servers, creating a consistent interface for AI assistants to access testing information

- MCP Clients: Testing tools can build AI applications that connect to these servers, maintaining context across different testing phases

Pre-built Integrations

Ready-to-use connections with essential testing tools (more here):

- Git repositories for test code management

- Jira for requirements tracking

- Postgres for test results storage

- CI/CD systems for test execution

Secure Data Access

- Organizations maintain control over their testing data within their infrastructure

- Standardized security practices for AI assistant interactions

- Clear protocols for data access and permissions

Context Preservation

- AI assistants maintain context as testers move between different tools and phases

- Consistent understanding of testing requirements and constraints across tools

- Seamless transfer of context between different testing activities

The Results

Enhanced AI Assistant Effectiveness

- Better understanding of testing context and requirements

- More relevant and accurate testing suggestions

- Improved code generation and test automation capabilities

Streamlined Testing Workflow

- Seamless movement between different testing tools

- Reduced context switching overhead

- More efficient collaboration between testers and AI assistants

Sustainable Testing Architecture

- Single protocol replaces multiple custom integrations

- Easier adoption of new testing tools and AI capabilities

- Reduced maintenance overhead for integrations

Improved Testing Quality

- More comprehensive test coverage through better context understanding

- Faster identification of testing gaps and issues

- More effective test automation and maintenance

Looking Ahead

MCP represents a fundamental shift in how AI assistants interact with testing environments. By providing a universal standard for data connectivity, it enables AI systems to better understand and support testing activities while maintaining security and control.

As more testing tools adopt MCP and the ecosystem of pre-built integrations grows, we’ll see increasingly sophisticated AI-powered testing capabilities. Instead of maintaining fragmented integrations, testing teams can focus on leveraging AI to improve test quality and efficiency.

The future of testing lies in connected systems that can seamlessly share context and information. MCP provides the foundation for this future, enabling AI assistants to become truly effective partners in the testing process while maintaining the security and control that organizations require.